Source: https://code.ill.fr/relaxse/relaxse-code

Installation:

- MPI+OMP version: /software/relaxse/2022.10/bin/

- OMP only version: /software/relaxse/2022.10-omp/bin/

Setup:

    module load maxwell gcc/9.3 openmpi/3.1.6
    ./configure --omp --mpi --gnu --build-type production
    cd build
    make -j 8 VERBOSE=1

Summary

- Single-node jobs almost always fail or take forever, except when running the OMP-only binary.

- Jobs on 2 nodes take forever (the 2-node EPYC 75F3 test hit the 44 h time limit).

- Using N MPI processes requires much more memory than using N OMP threads.

- Jobs on nodes with Intel Gold-6140/Gold-6240 CPUs are much slower than jobs on nodes with EPYC 7543/75F3 CPUs (4 nodes: 29:01:01 on Gold-6240 vs. 12:45:28 on EPYC 75F3).

- Using 8 or more nodes has little further impact on the runtime (EPYC 75F3: 09:34:38 on 6 nodes vs. 08:37:10 on 8 nodes).

- Using more OMP threads than there are physical cores per node slows jobs down (EPYC 75F3, 6 nodes, memreduce: 10:23:11 with 64 threads vs. more than 13 h with 128 threads).

- The temporary files produced by relaxse are huge and can easily reach a few hundred GB, so the home directory is not suitable as working directory.

- relaxse has a "mem reduce" option, which slightly reduces memory usage (~20%, see below) at the cost of increased runtime and I/O (EPYC 75F3, 6 nodes: 10:23:11 with memreduce vs. 09:34:38 without).

- OMP options such as `OMP_PLACES=cores` or `OMP_SCHEDULE="DYNAMIC,1"` don't seem to have an impact.

- `SASS_MEM` doesn't seem to be used at all; there is also no reference to `SASS_MEM` in the code.
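Because the temporary files can reach a few hundred GB and the home directory is unsuitable, it can help to check the working directory before a job starts. The following is a minimal sketch and not part of relaxse: the function name `check_workdir` and the default 300 GB threshold are hypothetical choices, and the free-space check assumes GNU `df` (for `--output=avail`).

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of relaxse) for a working directory.
# Return codes: 0 = ok, 1 = inside $HOME, 2 = too little free space, 3 = not a directory.
check_workdir() {
    local dir="$1"
    local min_gb="${2:-300}"   # assumed threshold; the notes only say "a few hundred GB"

    # Resolve physical paths so that symlinks into $HOME are caught as well.
    local abs home
    abs=$(cd "$dir" 2>/dev/null && pwd -P) || { echo "not a directory: $dir" >&2; return 3; }
    home=$(cd "$HOME" && pwd -P)

    # The temporary files are far too large for the home directory.
    case "$abs/" in
        "$home"/*) echo "refusing $abs: inside \$HOME" >&2; return 1 ;;
    esac

    # Free space in GiB on the file system holding the directory (GNU df).
    local avail
    avail=$(df --output=avail -BG "$abs" | tail -n 1 | tr -dc '0-9')
    if (( avail < min_gb )); then
        echo "only ${avail}G free in $abs, want at least ${min_gb}G" >&2
        return 2
    fi
    return 0
}
```

For example, `check_workdir /path/to/scratch 300 || exit 1` near the top of a batch script would stop the job before relaxse starts writing its temporary files.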

Best options

- Use 1 MPI process per node.

- Use as many OMP threads as there are physical cores: `$(( $(nproc) / 2 ))` (this assumes two hardware threads per core, i.e. hyperthreading enabled).

- Use EPYC 75F3 or EPYC 7543 nodes.

- Use 4-6 nodes per job.

- Use BeeGFS or, better, GPFS as the working directory.

- See the sample script below.
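`$(( $(nproc) / 2 ))` equals the number of physical cores only when every core exposes exactly two hardware threads. A slightly more robust sketch (an assumption, not taken from the relaxse documentation) counts the unique core/socket pairs reported by `lscpu` and falls back to the `nproc` formula. `physical_cores` is a hypothetical helper name, and the `mpirun` line is only a commented Open MPI example with placeholder executable and input names.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of relaxse): print the number of physical cores.
# lscpu reports the whole node, so this assumes the job has the node to itself,
# as with the recommended 1 MPI process per node.
physical_cores() {
    local n
    # One line per logical CPU as "core,socket"; lines starting with '#' are comments.
    # Unique (core,socket) pairs = physical cores, with or without hyperthreading.
    n=$(lscpu -p=Core,Socket 2>/dev/null | grep -v '^#' | sort -u | wc -l)
    if (( n > 0 )); then
        echo $(( n ))
    else
        # Fallback from the notes: assumes 2 hardware threads per core.
        local half=$(( $(nproc) / 2 ))
        echo $(( half > 0 ? half : 1 ))
    fi
}

export OMP_NUM_THREADS="$(physical_cores)"
echo "OMP_NUM_THREADS=$OMP_NUM_THREADS"

# Example launch with Open MPI, one rank per node (executable and input are placeholders).
# --bind-to none stops Open MPI from pinning the rank, and with it all of its
# OpenMP threads, to a single core or socket.
# mpirun --map-by ppr:1:node --bind-to none -x OMP_NUM_THREADS <relaxse-executable> <input>
```

On a 2-socket EPYC 75F3 node with hyperthreading enabled, both methods should give 64; the `lscpu` count stays correct if hyperthreading is switched off.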

Test runs

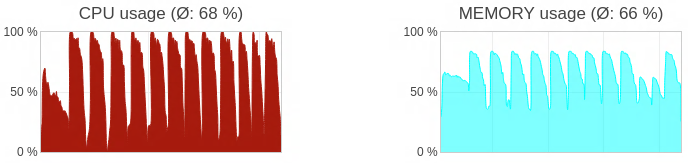

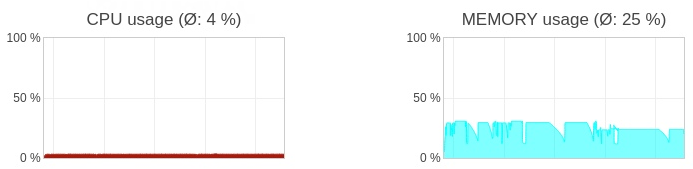

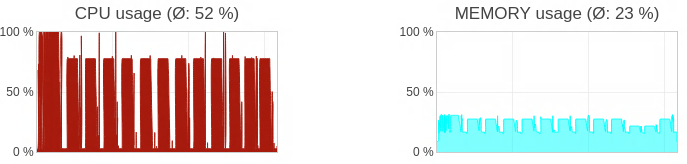

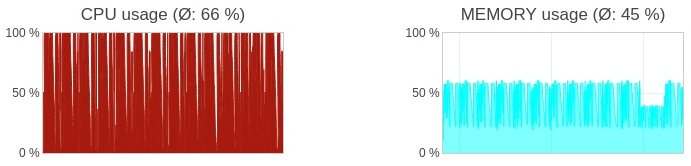

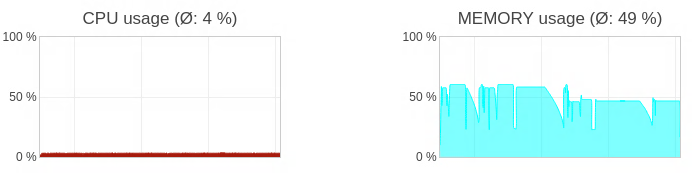

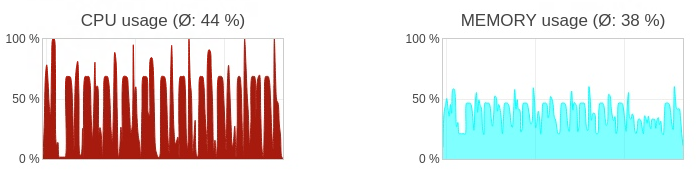

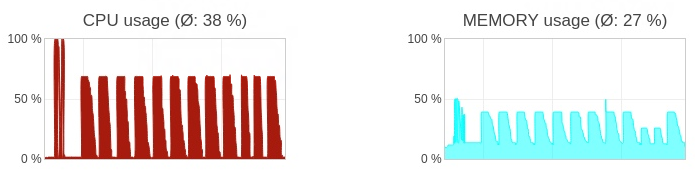

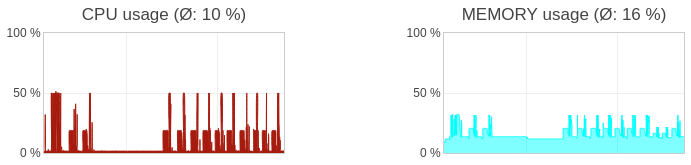

Several test runs are not listed because they failed, ran into the time limit, exceeded the memory limit, etc. The plots show the CPU and memory consumption of the master node of each job. To watch your own jobs, use https://max-portal.desy.de/webjobs/ (DESY network only). For completed jobs, enter `j<jobid>` in the Username field.

| CPU | Memory/node | Cores/node | Nodes | MPI procs/node | OMP threads/MPI proc | memreduce | Runtime (hh:mm:ss) | State | Job ID | Comment |
|---|---|---|---|---|---|---|---|---|---|---|
| EPYC 7643 | 1 TB | 128 | 1 | 24 | 1 | yes | 65:25:35 | ok | 13143778 | |
| EPYC 7643 | 1 TB | 128 | 1 | 24 | 4 | yes | 25:59:00 | ok | 13144152 | |
| EPYC 7643 | 1 TB | 128 | 3 | 1 | 64 | no | 15:14:52 | ok | 13149586 | |
| EPYC 7643 | 1 TB | 128 | 1 | 1 | 64 | no | | timeout | 13154408 | |
| Gold-6240 | 768 GB | 36 | 4 | 1 | 36 | no | 29:01:01 | ok | 13151534 | WD: GPFS |
| Gold-6240 | 768 GB | 36 | 4 | 1 | 36 | no | 29:01:39 | ok | 13162462 | WD: BeeGFS |
| Gold-6240 | 768 GB | 36 | 6 | 1 | 36 | no | 18:56:40 | ok | 13162464 | |
| Gold-6240 | 768 GB | 36 | 8 | 1 | 36 | no | 14:52:48 | ok | 13162463 | |
| EPYC 75F3 | 512 GB | 64 | 1 | 1 | 64 | no | 44:00:00 | timeout | 13157512 | MPI+OMP binary |
| EPYC 75F3 | 512 GB | 64 | 1 | 1 | 64 | no | 62:00:00 | ok | 13165664 | OMP-only binary |
| EPYC 75F3 | 512 GB | 64 | 2 | 1 | 64 | no | 44:00:00 | timeout | 13157511 | |
| EPYC 75F3 | 512 GB | 64 | 3 | 1 | 64 | no | 16:11:12 | ok | 13157510 | |
| EPYC 7543 | 1 TB | 64 | 3 | 1 | 64 | no | 15:29:05 | ok | 13149708 | |
| EPYC 75F3 | 512 GB | 64 | 4 | 1 | 64 | no | 12:45:28 | ok | 13154402 | |
| EPYC 75F3 | 512 GB | 64 | 6 | 1 | 64 | no | 09:34:38 | ok | 13157773 | |
| EPYC 75F3 | 512 GB | 64 | 6 | 1 | 64 | yes | 10:23:11 | ok | 13262741 | |
| EPYC 75F3 | 512 GB | 64 | 6 | 1 | 32 | yes | 15:48:41 | ok | 13273419 | |
| EPYC 75F3 | 512 GB | 64 | 6 | 1 | 128 | yes | >13h | slow | 13262950 | |
| EPYC 75F3 | 512 GB | 64 | 8 | 1 | 64 | no | 08:37:10 | ok | 13159577 | |
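To put numbers on the scaling remarks in the summary, the sketch below computes speed-up and parallel efficiency for the EPYC 75F3 runs with 1 MPI process per node, 64 OMP threads and no memreduce, relative to the 3-node run, plus the runtime overhead of memreduce on 6 nodes. All runtimes are copied from the table above; `to_seconds` is a hypothetical helper, and efficiency is simply speed-up × 3 / nodes.

```bash
#!/usr/bin/env bash
# Scaling of the EPYC 75F3 runs (1 MPI proc/node, 64 OMP threads, no memreduce),
# relative to the 3-node run. Runtimes are taken from the test-run table.

# Convert hh:mm:ss to seconds; 10# forces base 10 so "08"/"09" are not parsed as octal.
to_seconds() {
    local h m s
    IFS=: read -r h m s <<< "$1"
    echo $(( 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

base_nodes=3
base=$(to_seconds 16:11:12)

# Each entry is "nodes:hh:mm:ss".
for run in 4:12:45:28 6:09:34:38 8:08:37:10; do
    nodes=${run%%:*}                 # text before the first colon
    t=$(to_seconds "${run#*:}")      # the runtime after it
    awk -v n="$nodes" -v t="$t" -v bn="$base_nodes" -v bt="$base" 'BEGIN {
        speedup = bt / t
        printf "%d nodes: speed-up %.2f, efficiency %.0f%%\n", n, speedup, 100 * speedup * bn / n
    }'
done

# Runtime overhead of memreduce on 6 nodes (10:23:11 with vs. 09:34:38 without).
awk -v mr="$(to_seconds 10:23:11)" -v nomr="$(to_seconds 09:34:38)" 'BEGIN {
    printf "memreduce overhead on 6 nodes: %.1f%%\n", 100 * (mr - nomr) / nomr
}'
```

With the table values this reports a speed-up of 1.27, 1.69 and 1.88 on 4, 6 and 8 nodes (efficiency 95%, 85% and 70%) and a memreduce overhead of about 8.4%, which is consistent with the observation that going beyond 6 nodes buys comparatively little.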