We get occasional complaints about conda being very slow when launched from BeeGFS. Unfortunately, that's a known problem and hard to avoid. A conda session can easily open 5,000 to 10,000 files, which becomes particularly problematic for concurrently running processes. BeeGFS doesn't have a dedicated memory cache (GPFS does) and hence suffers much more from concurrent access to lots of files.
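
A rough way to see how many small files a conda installation consists of is to count the regular files below its prefix. The following is a minimal Python sketch, assuming the installation prefix is passed on the command line. Files on disk are only a proxy for the number of files a session actually opens, and walking the tree puts a similar metadata load on the filesystem.

```python
import os
import sys


def count_files(prefix: str) -> tuple[int, int]:
    """Return (number of regular files, total size in bytes) below prefix."""
    n_files = 0
    n_bytes = 0
    # os.walk does not follow symlinked directories by default.
    for root, _dirs, files in os.walk(prefix):
        for name in files:
            path = os.path.join(root, name)
            if os.path.islink(path):
                continue  # skip symlinks so shared files are not counted twice
            try:
                size = os.path.getsize(path)
            except OSError:
                continue  # file vanished or is unreadable
            n_files += 1
            n_bytes += size
    return n_files, n_bytes


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print(f"usage: {sys.argv[0]} CONDA_PREFIX")
    else:
        files, size = count_files(sys.argv[1])
        avg_kb = size / files / 1024 if files else 0.0
        print(f"{files} files, {avg_kb:.1f} kB on average")
```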

One way to overcome the issue is to containerize conda installations. From the filesystem's perspective, a singularity image is a single file. Loading a 1 GB singularity blob instead of lots of files of a few kB each has a slight overhead, but helps when running on BeeGFS.

Some quick tests were made on the filesystems available on Maxwell:

- /home: a GPFS-based filesystem

- /software: a GPFS-based filesystem

- /beegfs/desy: obviously hosted on BeeGFS

- /pnfs/desy.de: dCache

- local: for comparison, a few runs were done on a local disk

The test runs used two different installation mechanisms:

- A plain conda installation including tensorflow

- A CentOS 7 singularity image file containing the same conda + tensorflow installation
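
The two variants differ mainly in how the benchmark is launched. A minimal sketch of the two command lines, assuming hypothetical paths for the conda prefix and the image, and a hypothetical benchmark script `bench.py`. `singularity exec IMAGE COMMAND` runs a command inside the image.

```python
def bench_command(mechanism: str, script: str, conda_prefix: str = "", image: str = "") -> list[str]:
    """Build the command line for one of the two installation mechanisms.

    All paths are hypothetical placeholders.
    """
    if mechanism == "plain":
        # Call the interpreter of the plain conda installation directly,
        # so every module is loaded file by file from the filesystem.
        return [f"{conda_prefix}/bin/python", script]
    if mechanism == "singularity":
        # Run the interpreter inside the image; python must be on the image's PATH.
        # Singularity usually binds the current directory, so a script there is visible.
        return ["singularity", "exec", image, "python", script]
    raise ValueError(f"unknown mechanism: {mechanism!r}")
```

For example, `bench_command("singularity", "bench.py", image="/beegfs/desy/tf.sif")` gives `["singularity", "exec", "/beegfs/desy/tf.sif", "python", "bench.py"]`; the image name is made up for illustration.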

On dCache, the plain conda installation never succeeded (NFS struggled). dCache is also not well suited for concurrent processes and has hence been omitted from almost all plots.

A very simple tensorflow application was used as the benchmark. Its compute time varies only marginally, so almost all variation in runtime originates from loading the Python modules or the singularity image.
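
Since almost all of the runtime goes into loading modules, such a benchmark essentially measures the wall-clock time of starting a fresh interpreter and importing tensorflow. A minimal sketch of that kind of measurement, assuming the module name and interpreter are passed in (tensorflow and the respective installation's python in the tests above; a stdlib module in the demo) and an arbitrary number of repetitions:

```python
import statistics
import subprocess
import sys
import time


def time_import(module: str, python: str = sys.executable, repeats: int = 5) -> list[float]:
    """Wall-clock seconds for `python -c "import <module>"`, one fresh process per run."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        # A new process per run, so nothing is cached inside the interpreter itself
        # (the operating system's page cache may still be warm).
        subprocess.run([python, "-c", f"import {module}"], check=True)
        timings.append(time.perf_counter() - start)
    return timings


if __name__ == "__main__":
    runs = time_import("json")
    print(f"median {statistics.median(runs):.3f} s, max {max(runs):.3f} s")
```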

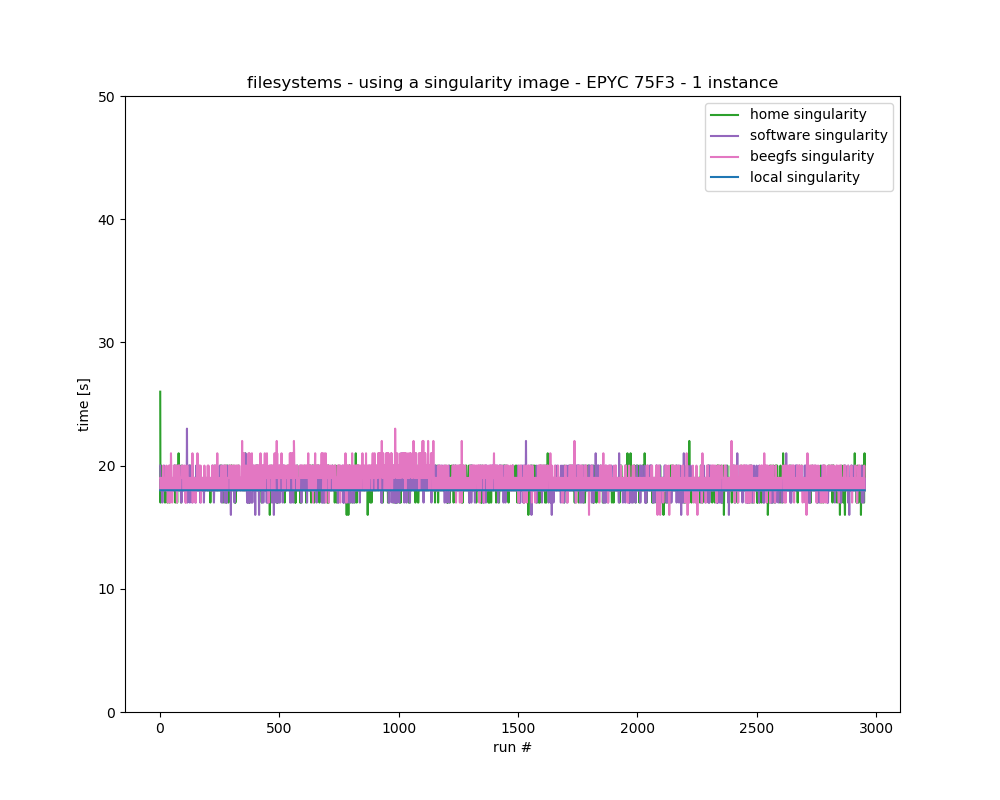

Comparing filesystems

| Singularity image | Plain conda installation |
|---|---|
| This test ran on EPYC 75F3 hardware using singularity images as the basis. A singularity image was deposited on each of the filesystems. Only one instance was executed at a time. All runs were made consecutively on the same node. | This test ran on EPYC 75F3 hardware using plain conda installations, a separate one for each filesystem. Only one instance was executed at a time. All runs were made consecutively on the same node. |

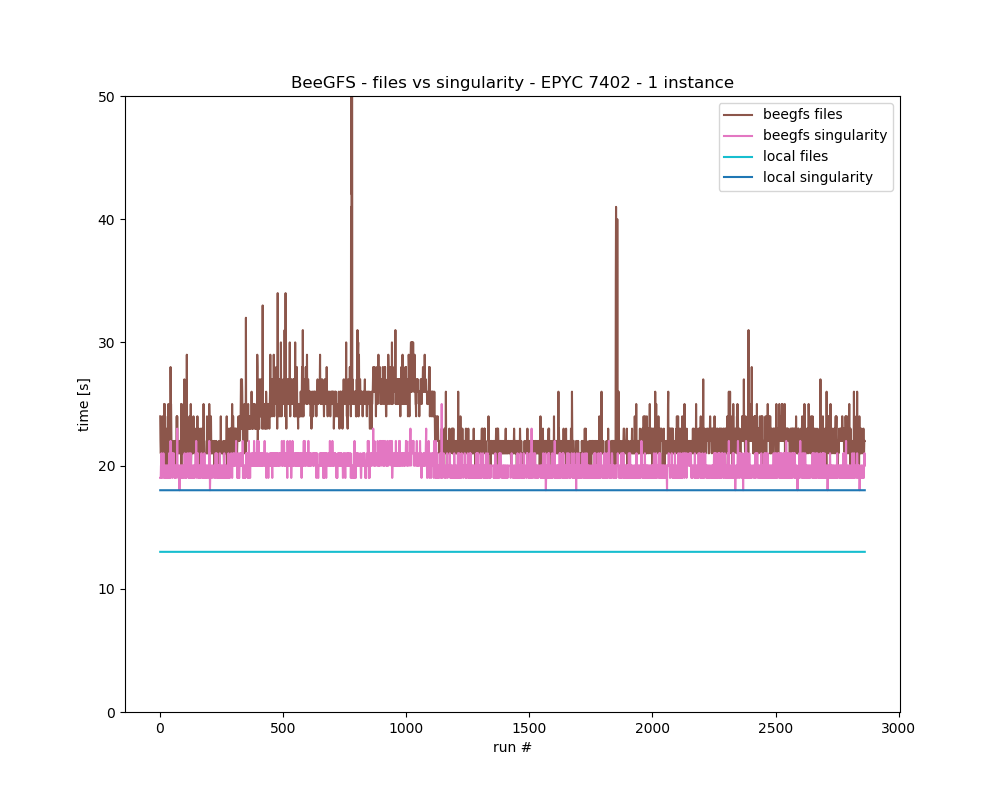

| Singularity image | Plain conda installation |
|---|---|
| This test ran on EPYC 7402 hardware using singularity images as the basis. I deposited a singularity image on each of the filesystems and ran the tensorflow test entirely on that filesystem. Only one instance was executed at a time. All runs were made consecutively on the same node. There is not much of a difference across filesystems when using singularity images. | This test ran on EPYC 7402 hardware using plain conda installations, a separate one for each filesystem. Only one instance was executed at a time. All runs were made consecutively on the same node. BeeGFS clearly suffers much more. |

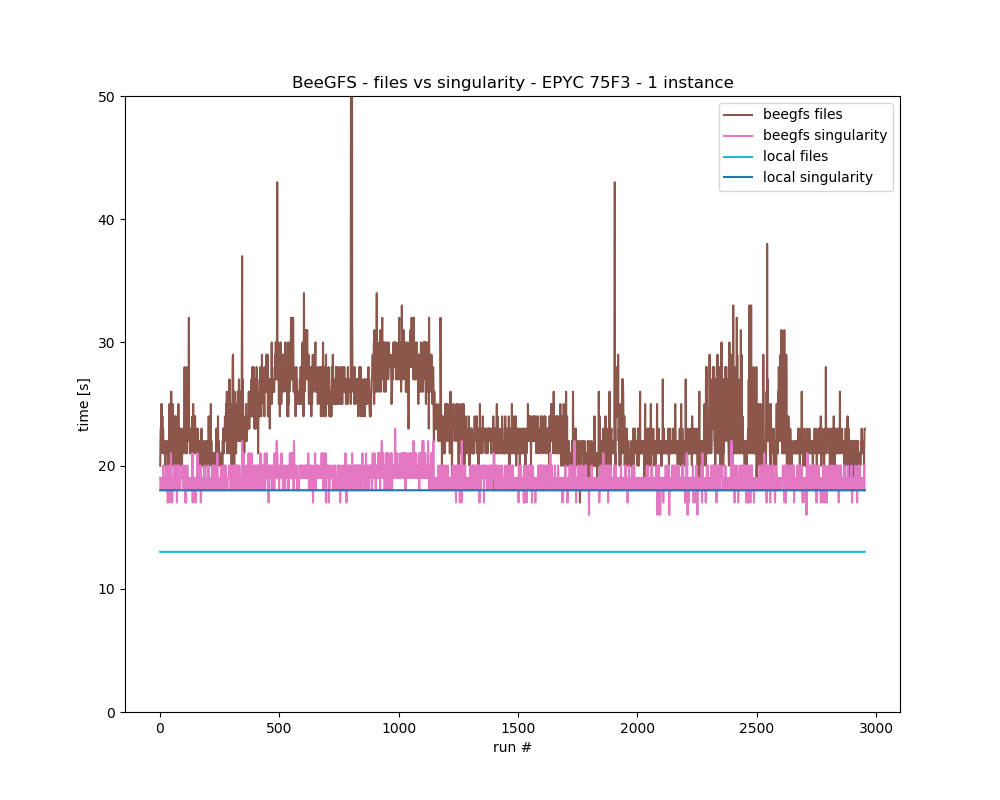

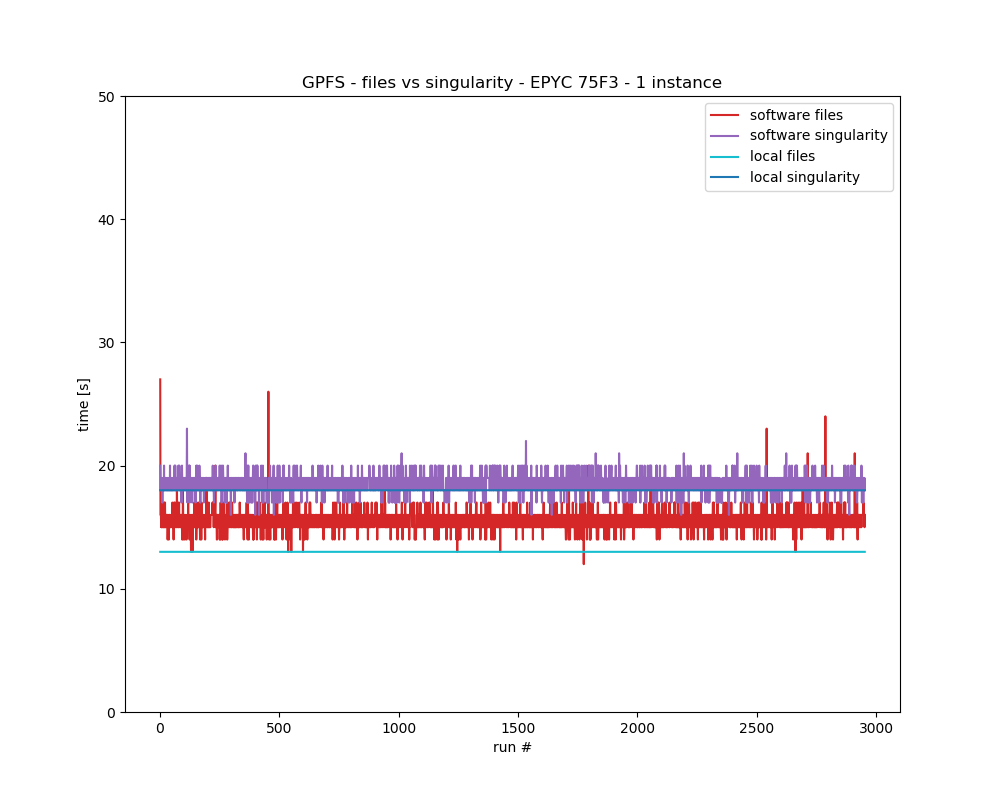

Singularity-based installation vs. plain filesystem installation

| BeeGFS | GPFS |
|---|---|
| This test ran on EPYC 75F3 hardware using BeeGFS installations, with exactly the same setup as above. On BeeGFS, a singularity image performs much better. | This test ran on EPYC 75F3 hardware using GPFS installations, with exactly the same setup as above. On GPFS, the plain installation is usually a little faster, but less subject to variation in runtime. |

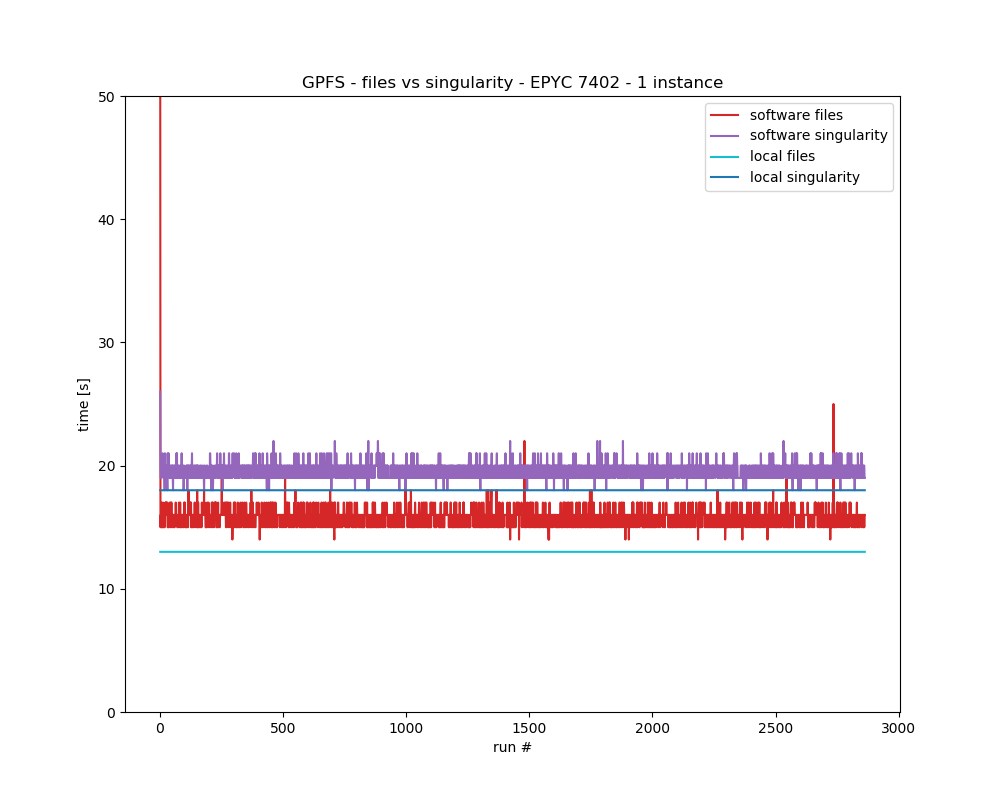

| BeeGFS | GPFS |
|---|---|
| This test ran on EPYC 7402 hardware using BeeGFS installations. | This test ran on EPYC 7402 hardware using GPFS installations. |

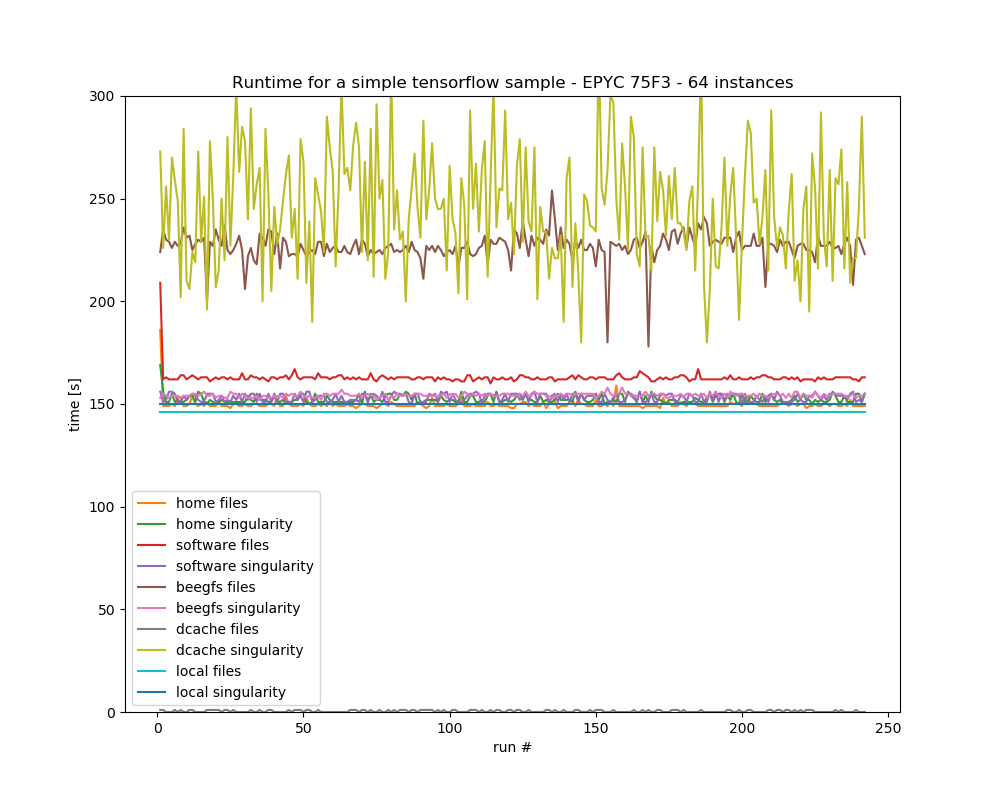

Running instances in parallel

Using the same setup as above, but running 64 instances in parallel.
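
Running many copies at once mostly means starting them together and recording each one's wall time. A minimal sketch, assuming the command to run is passed in as a list (a placeholder interpreter call in the demo rather than the actual tensorflow test) and that per-instance runtimes are what gets plotted:

```python
import subprocess
import sys
import time
from concurrent.futures import ThreadPoolExecutor


def run_once(cmd: list[str]) -> float:
    """Run cmd to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
    return time.perf_counter() - start


def run_concurrently(cmd: list[str], instances: int = 64) -> list[float]:
    """Start `instances` copies of cmd at once and return each copy's runtime."""
    # The threads only wait on child processes, so the GIL is not a bottleneck.
    with ThreadPoolExecutor(max_workers=instances) as pool:
        return list(pool.map(run_once, [cmd] * instances))


if __name__ == "__main__":
    times = run_concurrently([sys.executable, "-c", "import json"], instances=8)
    print(f"{len(times)} instances, slowest {max(times):.3f} s")
```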

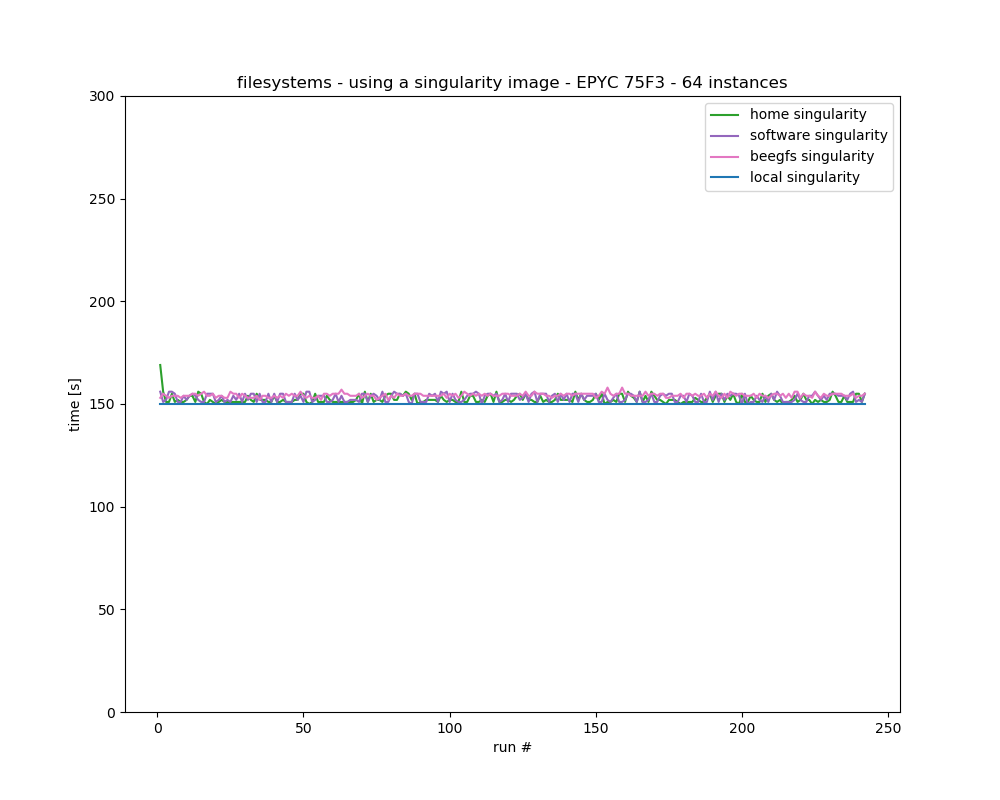

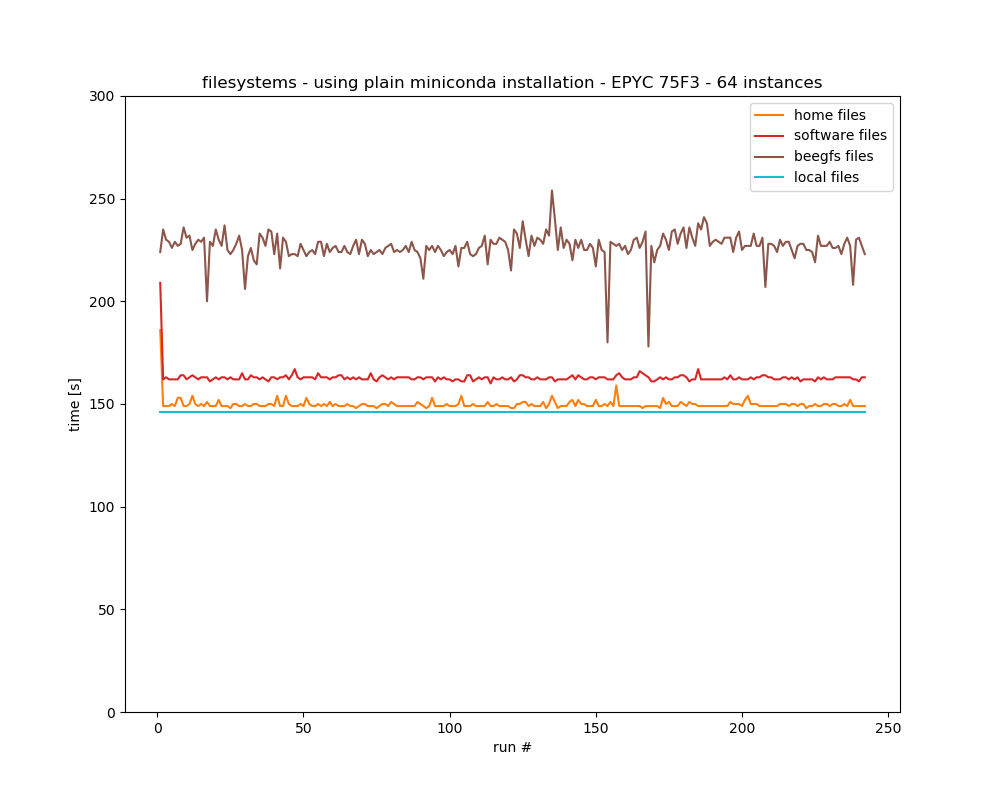

| Singularity image | Plain conda installation |
|---|---|
| This test ran on EPYC 75F3 hardware using singularity images as the basis. I deposited a singularity image on each of the filesystems and ran the tensorflow test entirely on that filesystem. 64 instances ran concurrently, all accessing the same image. All runs were made consecutively on the same node. There is not much of a difference across filesystems when using singularity images. | This test ran on EPYC 75F3 hardware using plain conda installations, a separate one for each filesystem. 64 instances ran concurrently, all accessing the same installation. All runs were made consecutively on the same node. BeeGFS gets really slow. |

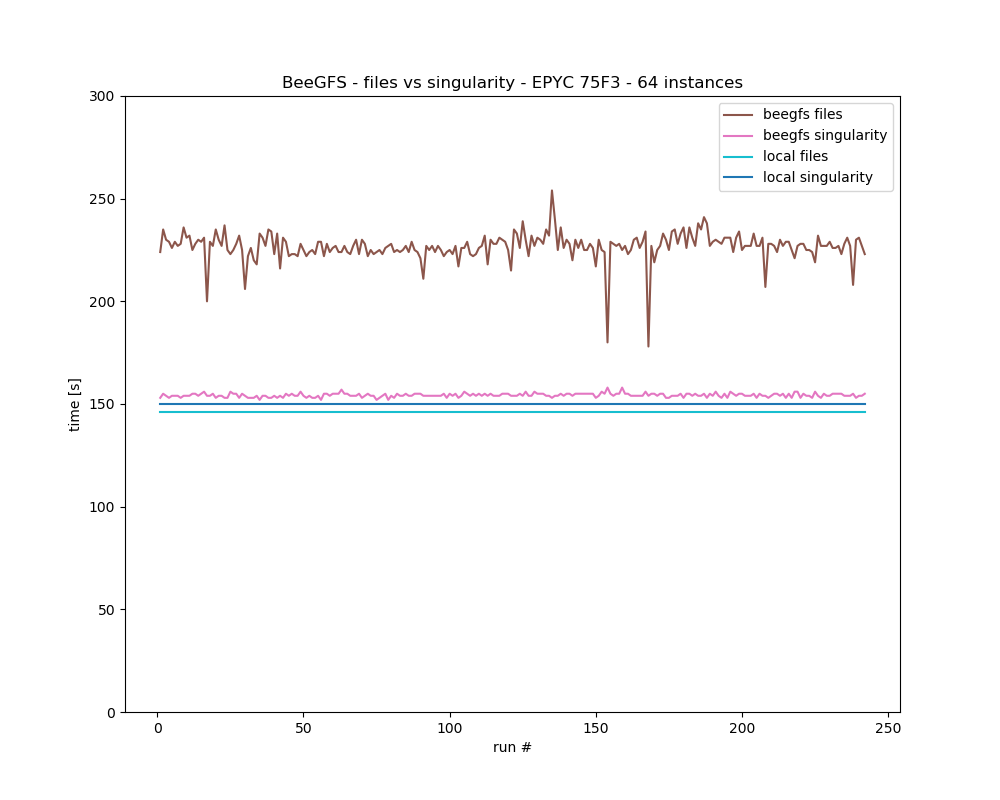

| BeeGFS | GPFS |
|---|---|
| This test ran on EPYC 75F3 hardware using BeeGFS installations, with exactly the same setup as above. On BeeGFS, using singularity images is much faster when running many instances concurrently. | This test ran on EPYC 75F3 hardware using GPFS installations, with exactly the same setup as above. On GPFS, singularity is also slightly faster than plain conda, but usually not worth the effort. |

| BeeGFS, all variations | Legend |
|---|---|
| This test ran on EPYC 75F3 hardware using BeeGFS installations, with exactly the same setup as above. It shows all the different variations for 64 concurrent instances in one plot. | |