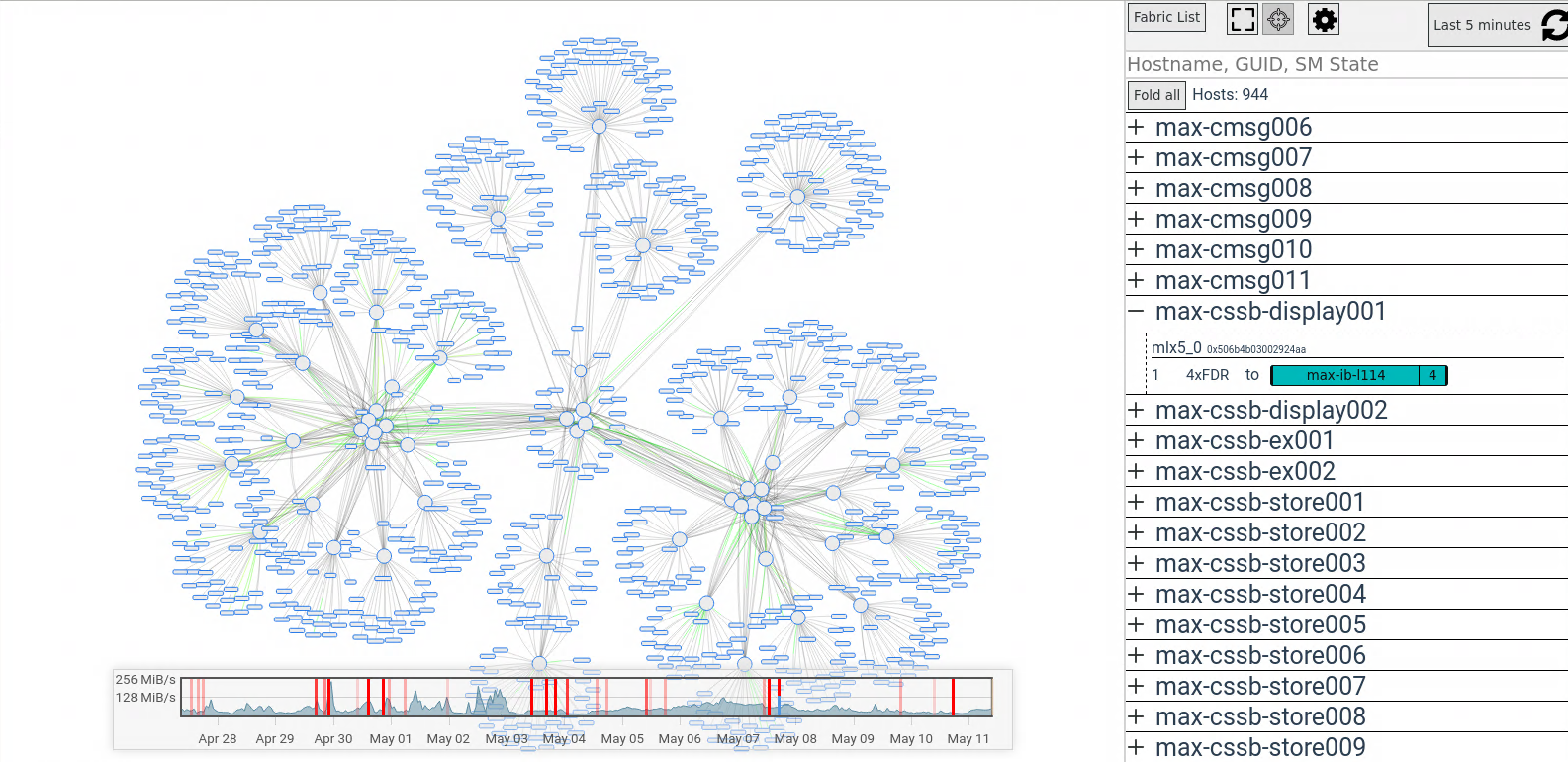

The Maxwell cluster uses an InfiniBand network for communication between hosts and for access to the cluster filesystems GPFS and BeeGFS. The main benefit of InfiniBand is its much lower latency: Ethernet typically has 5 times the latency of InfiniBand. This is particularly crucial for applications with heavy inter-process communication, for example MPI applications.
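
Why latency matters most for communication-heavy codes can be illustrated with the simple Hockney ("alpha-beta") cost model, in which every message costs a fixed latency plus its size divided by the bandwidth. This is only an illustrative sketch: the 1 microsecond InfiniBand latency and the shared 100 Gb/s bandwidth are hypothetical values chosen for the example, and only the 5x latency ratio is taken from the text above.

```python
# Hockney ("alpha-beta") model: time per message = latency + size / bandwidth.
# Absolute numbers are hypothetical; only the 5x latency ratio comes from the text.

IB_LATENCY_S = 1e-6                 # hypothetical InfiniBand latency (1 microsecond)
ETH_LATENCY_S = 5 * IB_LATENCY_S    # Ethernet: typically 5x the InfiniBand latency
BANDWIDTH_BPS = 100e9 / 8           # same 100 Gb/s (in bytes/s) for both, to isolate latency


def comm_time(n_msgs: int, msg_bytes: float, latency_s: float, bandwidth_Bps: float) -> float:
    """Total time to send n_msgs messages of msg_bytes each, one after another."""
    return n_msgs * (latency_s + msg_bytes / bandwidth_Bps)


def slowdown(n_msgs: int, msg_bytes: float) -> float:
    """How many times longer the same traffic takes on the higher-latency network."""
    eth = comm_time(n_msgs, msg_bytes, ETH_LATENCY_S, BANDWIDTH_BPS)
    ib = comm_time(n_msgs, msg_bytes, IB_LATENCY_S, BANDWIDTH_BPS)
    return eth / ib


if __name__ == "__main__":
    # Many small messages (typical of tightly coupled MPI codes): latency dominates.
    print(f"1e6 messages x 8 B: {slowdown(1_000_000, 8):.2f}x slower")
    # A few large transfers: bandwidth dominates and latency barely matters.
    print(f"10 messages x 1 GB: {slowdown(10, 1e9):.2f}x slower")
```

Under this model the slowdown approaches the raw 5x latency ratio as messages shrink and approaches 1 as they grow, so the gap is largest for applications exchanging many small messages.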

Like the cluster itself, the InfiniBand fabric of the Maxwell cluster has grown continuously over time and is similarly heterogeneous. The IB topology therefore falls into "older" and "newer" sub-clusters with slightly different hardware and configuration.

The older part of the cluster uses a 3-layered topology:

- root switches with HDR (200Gb/s)

- top switches with EDR (100Gb/s)

- leaf switches with HDR, EDR and partially FDR (56Gb/s)

The newer part of the cluster uses a 2-layered topology:

- root switches with HDR (200Gb/s)

- leaf switches with HDR (200Gb/s) and partially EDR (100Gb/s)
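
To make the difference between the two layouts concrete, the Python sketch below encodes the switch layers listed above and derives two rough figures: the worst-case number of switches a message crosses between two compute nodes, and the range of nominal link rates on a leaf-to-root path. It assumes a plain tree in which traffic climbs only as far as the lowest common switch layer, and that each inter-switch link runs at the slower of the two switch generations it connects; it ignores how many uplinks run in parallel. `SubCluster` and its methods are hypothetical names used only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Nominal 4x link rates in Gb/s for the InfiniBand generations named above.
RATE_GBPS = {"FDR": 56, "EDR": 100, "HDR": 200}


@dataclass(frozen=True)
class SubCluster:
    name: str
    # Switch generations present in each layer, ordered from leaf to root.
    layers: tuple[tuple[str, ...], ...]

    def worst_case_switch_hops(self) -> int:
        # Two leaves that only meet at the root: up through every layer and
        # back down, crossing the root once and every other layer twice.
        return 2 * len(self.layers) - 1

    def best_path_gbps(self) -> int:
        # Fastest switch in every layer; the slowest of those layers is the bottleneck.
        return min(max(RATE_GBPS[g] for g in layer) for layer in self.layers)

    def worst_path_gbps(self) -> int:
        # A path through the slowest switch of some layer is limited by that switch.
        return min(RATE_GBPS[g] for layer in self.layers for g in layer)


OLDER = SubCluster("older", (("HDR", "EDR", "FDR"), ("EDR",), ("HDR",)))
NEWER = SubCluster("newer", (("HDR", "EDR"), ("HDR",)))

if __name__ == "__main__":
    for sc in (OLDER, NEWER):
        print(f"{sc.name}: up to {sc.worst_case_switch_hops()} switch hops, "
              f"leaf-to-root link rate {sc.worst_path_gbps()}-{sc.best_path_gbps()} Gb/s")
```

Under these assumptions, the older part needs up to five switch hops and its EDR top layer caps leaf-to-root links at 100Gb/s even from HDR leaves, while the newer part needs at most three hops.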

The compute nodes are attached exclusively to leaf switches. Most of the newer compute nodes support HDR speed. However, due to the topology of the cluster, the compute nodes are connected with "split cables", where two nodes share one port on an HDR leaf switch, so the effective uplink speed of a compute node is ≤100Gb/s. GPFS storage nodes can utilize the full HDR speed.
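
On a Linux node, the negotiated speed of each local InfiniBand port can be read from sysfs under `/sys/class/infiniband/<device>/ports/<port>/rate`. The Python sketch below collects and parses those values. The exact rate string (assumed here to look like `100 Gb/sec (2X HDR)`) can vary with driver and kernel version, and the assumption that a split HDR port reports a 2X link width is ours, not part of the original text.

```python
from __future__ import annotations

import glob
import re
from pathlib import Path

# Assumed format of the sysfs "rate" attribute, e.g. "100 Gb/sec (2X HDR)".
RATE_RE = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*Gb/sec\s*\((\d+)X\s+(\w+)\)")


def parse_rate(text: str) -> tuple[float, int, str]:
    """Split a rate string into (Gb/s, link width in lanes, IB generation)."""
    m = RATE_RE.match(text)
    if m is None:
        raise ValueError(f"unrecognised rate string: {text!r}")
    return float(m.group(1)), int(m.group(2)), m.group(3)


def local_port_rates(root: str = "/sys/class/infiniband") -> dict[str, str]:
    """Map '<device>/<port>' to the raw rate string for every local IB port."""
    rates = {}
    for path in glob.glob(f"{root}/*/ports/*/rate"):
        p = Path(path)  # <root>/<device>/ports/<port>/rate
        rates[f"{p.parents[2].name}/{p.parent.name}"] = p.read_text().strip()
    return rates


if __name__ == "__main__":
    ports = local_port_rates()
    if not ports:
        print("no InfiniBand ports found")
    for port, raw in sorted(ports.items()):
        try:
            gbps, width, gen = parse_rate(raw)
        except ValueError:
            print(f"{port}: {raw} (unparsed)")
            continue
        # Assumption: a split HDR port shows up as 2X HDR, i.e. 100 Gb/s per node.
        note = " (split HDR port?)" if gen == "HDR" and width == 2 else ""
        print(f"{port}: {gbps:g} Gb/s, {width}X {gen}{note}")
```

On a compute node behind a split cable, one would expect a rate of at most 100 Gb/s even though the leaf switch port itself is HDR.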