Sources: https://www.lumerical.com/

License: ANSYS license terms apply to Lumerical. See also /software/lumerical/2021/license.txt on maxwell.

Documentation: https://www.lumerical.com/learn/

Licenses: concurrent licenses served by 1055@adserv204.desy.de, only accessible inside the DESY network

License availability: my-licenses -p ansys | egrep 'lum|Overview'
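The license server is only reachable from inside the DESY network, so it can help to check from a given host whether the server port answers before starting Lumerical. The sketch below is one way to do that, assuming bash's /dev/tcp redirection and the coreutils timeout command are available; check_license_server is a hypothetical helper, not a Maxwell tool. A successful connection only shows that the port is reachable, not that a Lumerical license is free (my-licenses answers that).

```shell
# Hypothetical helper: test whether a "port@host" license server
# accepts TCP connections from this machine.
check_license_server() {
    local spec=${1:-1055@adserv204.desy.de}
    local port=${spec%%@*}   # part before '@'
    local host=${spec#*@}    # part after '@'
    # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT;
    # timeout stops the attempt after 5 seconds.
    if timeout 5 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
        echo "reachable: $host:$port"
    else
        echo "NOT reachable: $host:$port (outside the DESY network?)"
        return 1
    fi
}
```

Called without an argument on a host inside DESY, check_license_server tests 1055@adserv204.desy.de.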

The ANSYS license server holds only a small number of Lumerical GUI licenses, so a GUI license may not be available when you need one. Batch jobs do not necessarily need a GUI license and can run even when all GUI licenses are in use. Note: the Python interface of Lumerical always needs a GUI license.

Using Lumerical

Basic Lumerical installations are available on Maxwell under /software/lumerical/<version>. You can either load a module (module load maxwell lumerical) or launch applications directly from /software/lumerical/<version>/bin/, e.g. /software/lumerical/2022/bin/mode-solutions.

```bash
module load maxwell lumerical          # loads the newest version
# module load maxwell lumerical/2021   # alternatively: load a specific version
lumerical                              # starts the launcher
```
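To run a specific version without the module, the path pattern /software/lumerical/<version>/bin/<application> can be wrapped in a small helper that fails early when a version or application is not installed. lumerical_app and the LUMERICAL_ROOT override are hypothetical names used only for illustration.

```shell
# Hypothetical helper: resolve a Lumerical application for a given version
# under <root>/<version>/bin/ and fail early if it is missing.
# LUMERICAL_ROOT defaults to the Maxwell installation path.
lumerical_app() {
    local version=${1:?version missing} app=${2:?application missing}
    local root=${LUMERICAL_ROOT:-/software/lumerical}
    local exe="$root/$version/bin/$app"
    if [[ -x $exe ]]; then
        printf '%s\n' "$exe"
    else
        echo "no executable $exe" >&2
        return 1
    fi
}
```

Usage: `exe=$(lumerical_app 2022 mode-solutions) && "$exe"` starts MODE 2022 only if it is actually installed.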

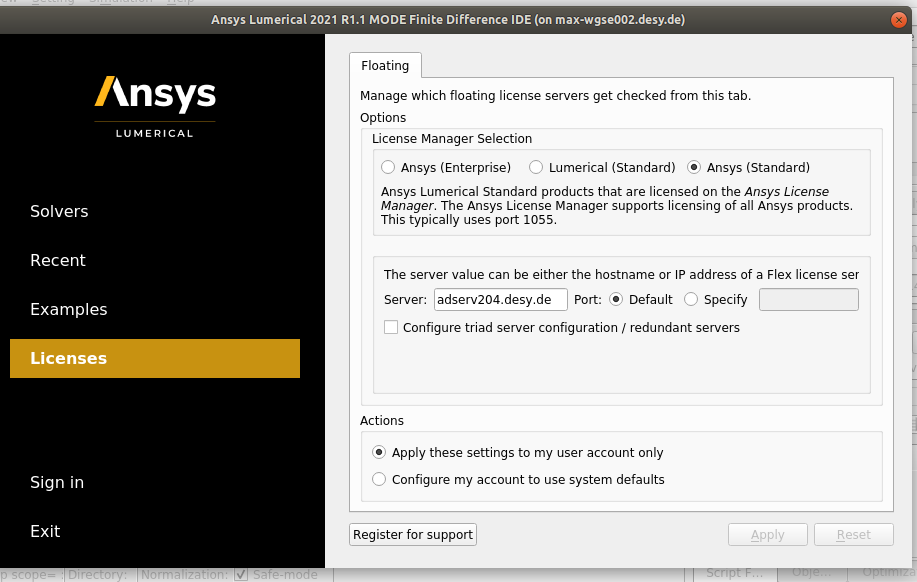

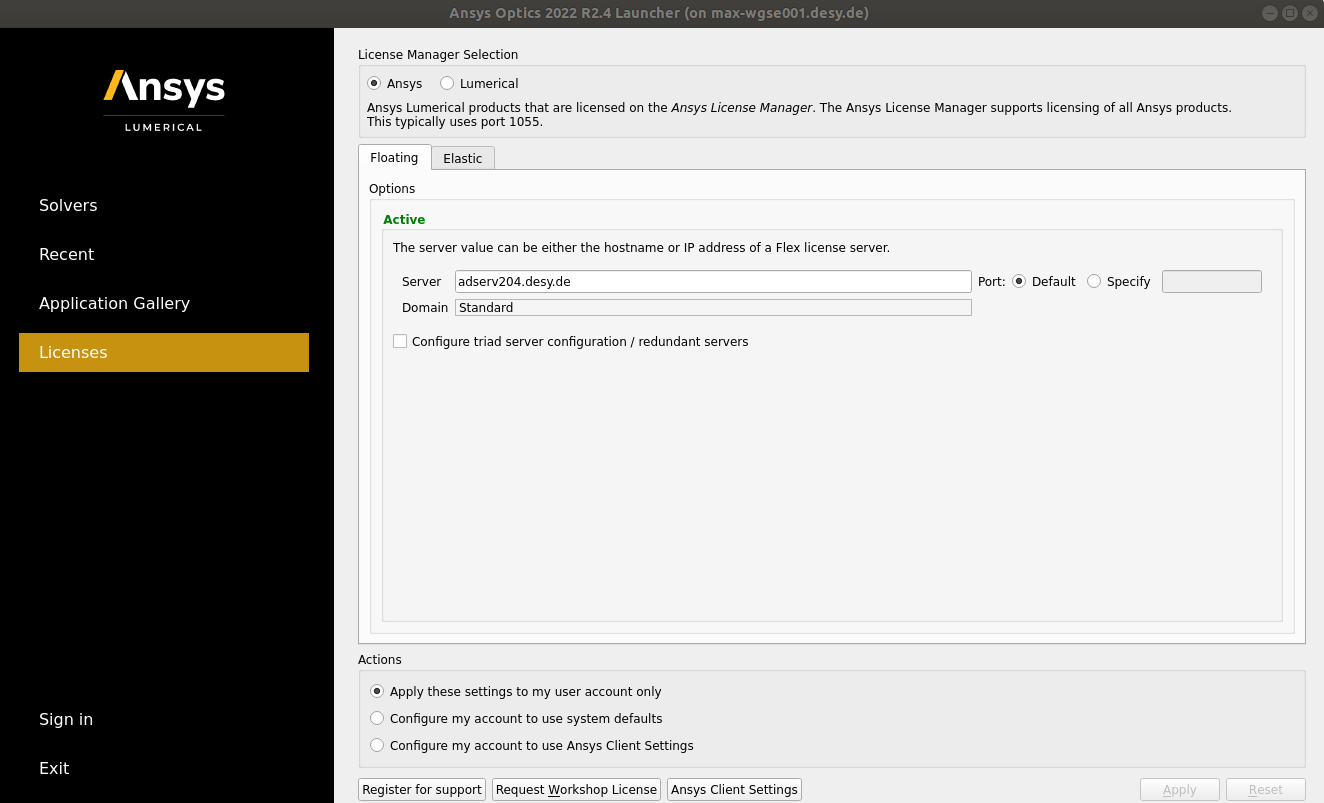

The lumerical module also creates the file ~/.config/Lumerical/License.ini if it doesn't exist. If Lumerical nevertheless asks for a license server configuration, select "Ansys (Standard)" and enter adserv204.desy.de as the license server with the default port (or 1055), as shown in the screenshot above.
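Before digging into the license dialog, a quick check whether the per-user License.ini exists tells you whether the module has already created it. This is only a sketch: lumerical_license_ini is a hypothetical helper, and it does not inspect the file's contents, whose format is not described here.

```shell
# Hypothetical helper: report whether the per-user Lumerical license file
# (created by the lumerical module if missing) is present.
lumerical_license_ini() {
    local ini="$HOME/.config/Lumerical/License.ini"
    if [[ -f $ini ]]; then
        echo "found: $ini"
    else
        echo "missing: $ini (loading the lumerical module creates it)"
        return 1
    fi
}
```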

Lumerical in batch

Running a batch job on Maxwell is straightforward. A sample script could look like this:

```bash
#!/bin/bash
#SBATCH --partition=short
#SBATCH --time=0-02:00
#SBATCH --job-name=lumerical
#SBATCH --nodes=1

unset LD_PRELOAD
source /etc/profile.d/modules.sh

# number of physical cores per node
# (assumes 2 hardware threads per core, i.e. hyperthreading enabled)
N=$(( $(nproc) / 2 ))

LUMERICAL=/software/lumerical/2021
INPUT="$PWD/fdtd_grating_trial.fsp"

#
# this is just an example!
# when use_openmpi=0, lumerical uses the MPI implementation (MPICH2) shipping with lumerical,
# otherwise it uses an OpenMPI variant available on Maxwell.
# the latter has better performance, at least on EPYC nodes.
#
use_openmpi=0

if [[ $use_openmpi -eq 0 ]]; then
    "$LUMERICAL/mpich2/nemesis/bin/mpiexec" -ppn "$N" "$LUMERICAL/bin/fdtd-engine-mpich2nem" -t 1 "$INPUT"
else
    module load maxwell gcc/9.3 openmpi/3.1.6
    mpirun -N "$N" --mca pml ucx --mca mpi_cuda_support 0 "$LUMERICAL/bin/fdtd-engine-ompi-lcl" -t 1 "$INPUT"
fi
```
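N=$(( $(nproc) / 2 )) assumes two hardware threads per physical core; on nodes with hyperthreading disabled it starts only half as many MPI processes as there are cores. A sketch of an alternative, assuming lscpu from util-linux is available on the compute nodes, counts unique (core, socket) pairs instead. physical_cores is a hypothetical helper. Note that lscpu describes the whole node while nproc only counts the CPUs the job may use, so this variant fits jobs that own the entire node.

```shell
# Sketch: count physical cores as unique (core, socket) pairs reported by
# lscpu, instead of assuming 2 hardware threads per core.
physical_cores() {
    local n
    # --parse prints one line per logical CPU; lines starting with '#' are headers
    n=$(lscpu --parse=CORE,SOCKET 2>/dev/null | grep -v '^#' | sort -u | wc -l)
    if (( n == 0 )); then
        n=$(( $(nproc) / 2 ))   # fallback: the heuristic from the script
    fi
    (( n >= 1 )) || n=1          # never start zero MPI processes
    echo $(( n ))
}

N=$(physical_cores)
```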

Submit the script from the folder containing the Lumerical input file (fdtd_grating_trial.fsp in the sample). Slurm starts the job in the submission directory, so $PWD in the script points to that folder. Assuming the script was saved as lumerical.sh:

```bash
sbatch lumerical.sh
```
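The submission step can be wrapped so that it refuses to submit when the input file is not in the current directory, and optionally overrides the partition from the command line (for example with one listed by my-partitions). submit_lumerical is a hypothetical helper; it relies on sbatch --parsable, which prints only the job ID (or jobid;cluster).

```shell
# Hypothetical wrapper around "sbatch lumerical.sh" with a pre-flight check.
submit_lumerical() {
    local partition=${1:-}
    local input=fdtd_grating_trial.fsp   # must match the file name used in lumerical.sh
    if [[ ! -f $input ]]; then
        echo "input file $input not found in $PWD" >&2
        return 1
    fi
    local opts=(--parsable)
    if [[ -n $partition ]]; then
        # options on the sbatch command line take precedence over #SBATCH lines
        opts+=(--partition="$partition")
    fi
    local out
    out=$(sbatch "${opts[@]}" lumerical.sh) || return 1
    # --parsable prints "jobid" or "jobid;cluster"
    echo "submitted job ${out%%;*}"
}
```

Run submit_lumerical (or submit_lumerical <partition>) in the folder with the .fsp file; the printed job ID can then be passed to squeue -j.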

SLURM integration

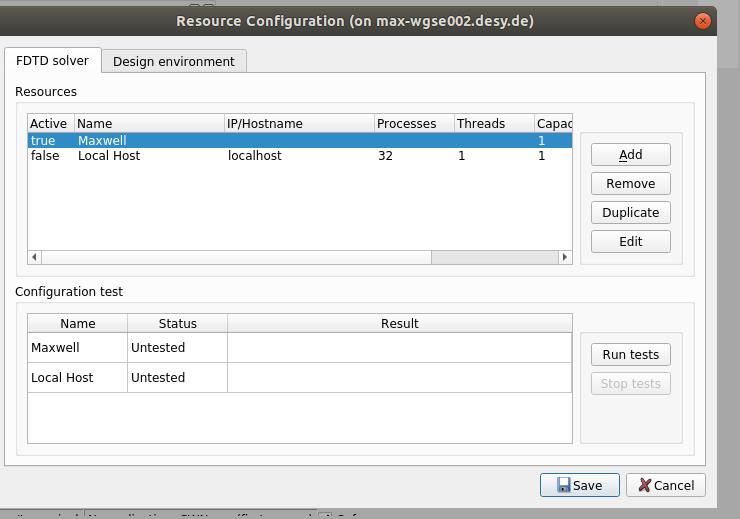

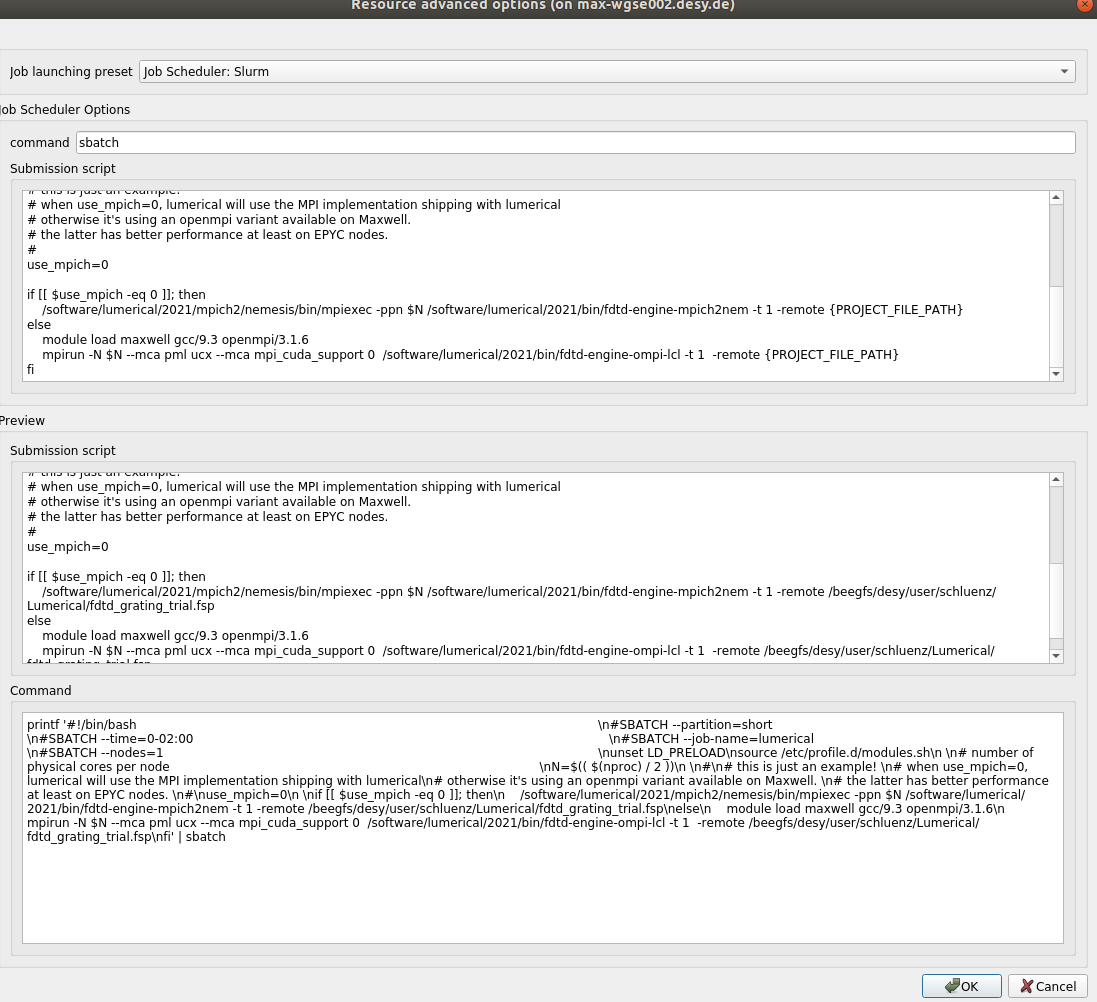

The screenshots show a working sample configuration.

Note: it only works out of the box on Maxwell submission hosts (e.g. max-display), and you might need to change the partition to one you are allowed to use (the command my-partitions lists them).